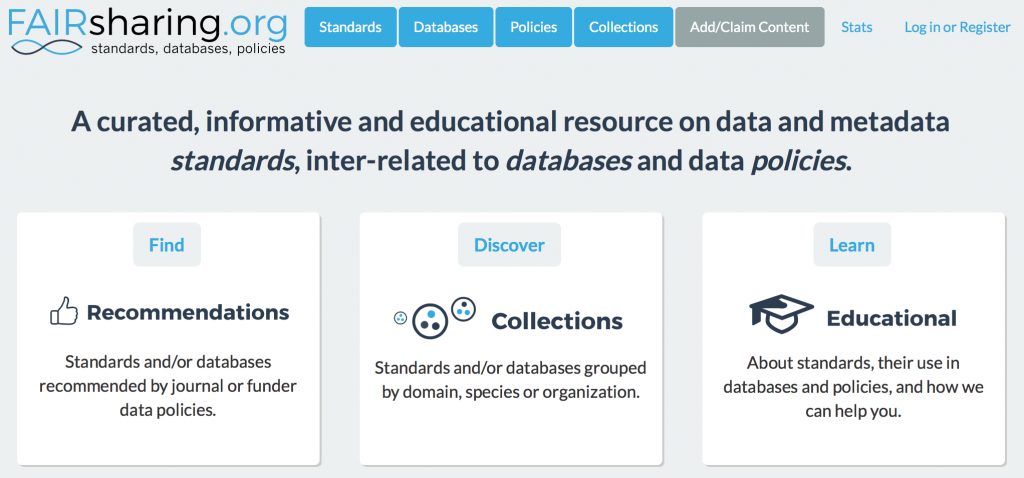

The site fairsharing.org is a repository of information about FAIR (Findable, Accessible, Interoperable and Reusable) objects such as research data.

A project to add chemical components to that site, where they are rather sparse at the moment, is being promoted through workshops under the auspices of, for example, IUPAC, CODATA and the GO-FAIR initiative. One aspect of this activity is to help identify examples of both good (FAIR) and less good (unFAIR) research data associated with contemporary scientific journal publications.

Here is one example I came across in 2017.[1] The data associated with this article is certainly copious: 907 pages of it, not including the data for 21 crystal structures! The latter is a good example of FAIR, being offered in a standard format (CIF) well adapted to the type of data it contains, and for which there are numerous programs capable of visualising and inter-operating with (i.e. re-using) it. The former is in PDF, a format not originally developed for data, and one could argue it sits closer to the unFAIR end of the spectrum. More so when you consider that this single 907-page document contains diverse information, including spectra for around 60 molecules. The spectra are purely visual; they are obviously data, but in a form designed largely for human consumption rather than re-use by software. The text-based content of this PDF does contain numerous patterns, which lends itself to pattern-recognition software such as OSCAR, but patterns are easily broken by errors or inexperience, so we cannot be certain what proportion of it can be recovered. The metadata associated with such a collection, if there is any at all, must be general and cannot easily be related to specific molecules in the collection. So I would argue that 907 pages of data wrapped in PDF is not a good example of FAIR. But it is how almost all of the data currently reported in chemistry journals is expressed. Indeed many a journal data editor (a relatively new addition to editorial teams) exercises rigorous oversight of the data submitted with articles to ensure it adheres to this monolithic PDF format.
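To illustrate why text patterns are fragile, here is a minimal Python sketch that pulls a 1H NMR peak list out of SI-style text using regular expressions. It is not how OSCAR works; the patterns, the function name `parse_1h_peaks` and the example strings are hypothetical, assuming just one common way of writing such peak lists.

```python
import re

# Hypothetical patterns for ONE common way of writing a 1H NMR peak list, e.g.
# "1H NMR (400 MHz, CDCl3) δ 7.26 (d, J = 8.0 Hz, 2H), 3.81 (s, 3H)".
HEADER = re.compile(r"1H NMR \((\d+) MHz, ([^)]+)\)\s*δ")
PEAK = re.compile(
    r"(\d+\.\d+)\s*"                        # chemical shift (ppm)
    r"\(([a-z]+)"                           # multiplicity: s, d, t, dd, m, ...
    r"(?:,\s*J\s*=\s*([\d.,\s]+?)\s*Hz)?"   # optional coupling constant(s)
    r",\s*(\d+)H\)"                         # integration
)

def parse_1h_peaks(text):
    """Return (frequency_MHz, solvent, peaks), or None if the header is not recognised."""
    header = HEADER.search(text)
    if header is None:
        return None
    peaks = [
        # findall gives '' for the optional J group when it did not match
        {"shift_ppm": float(shift), "mult": mult, "J_Hz": j.strip() or None, "nH": int(n_h)}
        for shift, mult, j, n_h in PEAK.findall(text, header.end())
    ]
    return int(header.group(1)), header.group(2), peaks

good = "1H NMR (400 MHz, CDCl3) δ 7.26 (d, J = 8.0 Hz, 2H), 3.81 (s, 3H)."
print(parse_1h_peaks(good))
print(parse_1h_peaks("1H NMR (400 MHz, CDCl3): δ 7.26 (d, J = 8.0 Hz, 2H)"))
print(parse_1h_peaks("1H NMR (400 MHz, CDCl3) δ 7.26 (d, 2H, J = 8.0 Hz)"))
```

A colon after the header is enough to make the whole record vanish (the function returns `None`), and writing the integration before the coupling constant silently drops that peak (an empty list). Neither failure raises an error, which is why the recoverable proportion is so hard to know.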

You can also visit this article in Chemistry World (rsc.li/2HG7lTk) for an alternative view of what could be regarded as rather more FAIR data. The article cites the FAIR components, which are not published as part of the article, or indeed by the journal itself, but are held separately in a research data repository. You will find them at doi: 10.14469/hpc/3657, where examples of computational, crystallographic and spectroscopic data are available.

The workshop I allude to above will be held in July. May I ask anyone reading this blog who has come across a favourite FAIR, or indeed unFAIR, example of data to share it here? We also need to identify areas simply crying out for FAIRer data to be made available as part of the publishing process, beyond the types noted above. I hope to report back on both this feedback and the events at the workshop in due course.

References

- J.M. Lopchuk, K. Fjelbye, Y. Kawamata, L.R. Malins, C. Pan, R. Gianatassio, J. Wang, L. Prieto, J. Bradow, T.A. Brandt, M.R. Collins, J. Elleraas, J. Ewanicki, W. Farrell, O.O. Fadeyi, G.M. Gallego, J.J. Mousseau, R. Oliver, N.W. Sach, J.K. Smith, J.E. Spangler, H. Zhu, J. Zhu, and P.S. Baran, "Strain-Release Heteroatom Functionalization: Development, Scope, and Stereospecificity", Journal of the American Chemical Society, vol. 139, pp. 3209-3226, 2017. https://doi.org/10.1021/jacs.6b13229


Hi Henry, I liked the RSC article, though it seemed strange that the “house style” required conversion of FAIR to Fair (this despite the presence of HTML, DOI, NMR and a few other all-caps acronyms).

But in your discussion of spectroscopy you described a set of data as “FAIR” despite requiring a cryptographic license key. I would disagree; while this may meet the FAR of FAIR, it’s not interoperable as it requires a particular vendor’s software. Unless I misunderstood, I can’t write software to read it myself.

NMR raises a number of interesting issues. At one end are the advocates of JCAMP-DX, an (old) standard for inter-operable data, but one that is relatively poorly supported by modern software and in many ways lags behind modern pulse-sequence experiments. It is also very lossy, since the FID (free induction decay) is typically thrown away to create a JCAMP file. At the other end are the FIDs themselves, along with the instrumental settings needed to process them into frequency-domain spectra. Doing this requires much more sophisticated software, and such software is really only available via instrument vendors nowadays. So to gain access to the FULL (lossless) data, one also needs access to that software. One vendor (MestreLabs) has implemented a system in which the full capability of its software is unlocked by the cryptographic license key included with the dataset, and the software itself is available as a free download.
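For readers unfamiliar with that processing step, here is a minimal Python sketch of turning an FID into a spectrum: an exponential window, optional zero filling and a Fourier transform. Everything in it is an assumption for illustration: a synthetic complex FID with invented offsets, dwell time and T2, hypothetical function names, a plain DFT instead of an FFT, and magnitude mode to sidestep phase correction (which real software performs, along with baseline correction and referencing).

```python
import cmath
import math

def simulate_fid(offsets_hz, n_points=256, dwell_s=1e-3, t2_s=0.05):
    """Synthetic complex FID: one decaying complex exponential per resonance offset (Hz)."""
    return [
        sum(cmath.exp(2j * math.pi * f * k * dwell_s) for f in offsets_hz)
        * math.exp(-k * dwell_s / t2_s)
        for k in range(n_points)
    ]

def process_fid(fid, dwell_s=1e-3, line_broadening_hz=0.0, zero_fill_to=0):
    """Apodise, zero-fill and Fourier transform an FID.

    Returns (frequency_hz, magnitude) pairs sorted by frequency. The line
    broadening and zero filling are processing choices that cannot be revisited
    once only the resulting spectrum is kept.
    """
    n = max(zero_fill_to, len(fid))
    # Exponential apodisation: multiply point k by exp(-pi * LB * t_k).
    data = [x * math.exp(-math.pi * line_broadening_hz * k * dwell_s) for k, x in enumerate(fid)]
    data += [0j] * (n - len(data))  # zero filling
    spectrum = []
    for m in range(n):
        # Plain O(n^2) discrete Fourier transform for clarity; real software uses an FFT.
        value = sum(x * cmath.exp(-2j * math.pi * k * m / n) for k, x in enumerate(data))
        # Bins above n/2 represent negative offsets: map them onto -SW/2 .. +SW/2.
        freq_hz = (m if m < n // 2 else m - n) / (n * dwell_s)
        spectrum.append((freq_hz, abs(value)))
    return sorted(spectrum)

fid = simulate_fid([125.0, -187.5])
spectrum = process_fid(fid, line_broadening_hz=2.0, zero_fill_to=512)
print(max(spectrum, key=lambda p: p[1]))
```

The `line_broadening_hz` and `zero_fill_to` arguments stand in for the kind of choices that are frozen once only the processed spectrum is archived; keeping the FID keeps them open.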

If you investigate the datasets alluded to in the examples I gave, you will find that the full dataset includes a ZIP archive of the instrumental data as it emerges from the spectrometer, along with a second set in which it has been transformed into an .mnova file, accompanied by the cryptographic license key. The interested reader then DOES have a choice: acquire the unprocessed raw instrumental data and identify any program they think might be able to process it, or acquire the .mnova version, knowing that they can obtain MestreNova itself at no cost and use its features in full for that specific dataset.

A few of the depositions ALSO include the JCAMP file for good measure. This freedom of choice in how to analyse the data is what I refer to as FAIR. What is slightly less FAIR is to have only a partially processed set of data available, which removes the ability to re-apply transforms to the original data.

Exactly the same issues arise with crystallographic data. Most diffractometers produce a large dataset of raw image files containing the diffraction data. The well-known CIF, which I also allude to above, is a severe reduction of that data into a fully refined model of the atomic coordinates. The reduction is from ~1 Gbyte down to ~1 kbyte, a factor of around a million. A CIF file in fact does NOT allow any new refinement of the data to be done; one has to accept that the refinement is suitably free of error. If users DO want to reanalyse the diffraction data, they too will need access to sophisticated and often commercial software, which is easier said than done. So in this regard, .mnova NMR files plus the cryptographic license (as a .mpub file) could be regarded as FAIRer than CIF files, which are regarded as the crystallographic gold standard.
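To show what a CIF actually holds, here is a minimal Python sketch that reads the six unit-cell parameters, with their standard uncertainties in parentheses, from simple `tag value` lines. The function names and cell values are invented for illustration, and the sketch deliberately ignores loops, quoted strings and text fields; real use calls for a full CIF parser such as PyCifRW or gemmi.

```python
import re

# A numeric CIF value with an optional standard uncertainty, e.g. "10.2345(12)".
# Exponent notation is not handled in this sketch.
NUMBER_SU = re.compile(r"^([-+]?\d*\.?\d+)(?:\((\d+)\))?$")

CELL_TAGS = ("_cell_length_a", "_cell_length_b", "_cell_length_c",
             "_cell_angle_alpha", "_cell_angle_beta", "_cell_angle_gamma")

def parse_cif_number(token):
    """Return (value, standard_uncertainty); the uncertainty is None if not given."""
    m = NUMBER_SU.match(token)
    if m is None:
        raise ValueError(f"not a simple CIF number: {token!r}")
    value_text, su_digits = m.groups()
    if su_digits is None:
        return float(value_text), None
    # The su applies to the last digits shown: 10.2345(12) means 10.2345 +/- 0.0012
    decimals = len(value_text.split(".")[1]) if "." in value_text else 0
    return float(value_text), int(su_digits) * 10 ** -decimals

def read_cell(cif_text):
    """Collect unit-cell parameters from simple 'tag value' lines (no loops handled)."""
    cell = {}
    for line in cif_text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[0] in CELL_TAGS:
            cell[parts[0]] = parse_cif_number(parts[1])
    return cell

example = """data_example
_cell_length_a 10.2345(12)
_cell_length_b 7.891(2)
_cell_length_c 15.0032(15)
_cell_angle_alpha 90
_cell_angle_beta 104.567(3)
_cell_angle_gamma 90
"""
print(read_cell(example))
```

Everything it returns is already the output of a refinement; nothing in these lines would let you redo it.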

The computational files present a similar picture. The computational archive contains a CML file that is fully open, but lossy with respect to the original computation. The .fchk file is much less lossy, but is tied to one specific code (Gaussian), and you do need a suitable program to read it (e.g. GaussView, which is NOT free, or Avogadro, which is free but supports only a subset of the data analysis). Again the reader is presented with a choice.
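Because .fchk is a plain-text format, its raw numbers can at least be read with a few lines of code, even if interpreting them (orbitals, densities, spectra) is what needs GaussView or Avogadro. This is a minimal Python sketch, assuming the layout commonly documented for formatted checkpoint files: an entry name in columns 1-40, a type code in column 44, and `N=` followed by an element count for arrays. The function name `parse_fchk` is hypothetical, and only integer and real entries are handled.

```python
def parse_fchk(text):
    """Parse integer and real entries of a formatted checkpoint file into a dict.

    Assumed layout: two title lines, then entries with the name in columns 1-40,
    a type code (I, R, C, L or H) in column 44, and either a scalar value or
    'N=' plus an element count, followed by indented continuation lines.
    """
    lines = text.splitlines()
    entries = {}
    i = 2  # skip the title line and the job type / method / basis line
    while i < len(lines):
        line = lines[i]
        i += 1
        if len(line) < 45:
            continue  # too short to be an entry line in this layout
        name, kind, rest = line[:40].strip(), line[43], line[44:].split()
        if not rest:
            continue
        if rest[0] == "N=":
            count = int(rest[1])
            tokens = []
            # Continuation lines start with whitespace; the next entry name does not.
            while i < len(lines) and lines[i][:1].isspace():
                tokens.extend(lines[i].split())
                i += 1
            if kind == "I":
                entries[name] = [int(t) for t in tokens[:count]]
            elif kind == "R":
                entries[name] = [float(t) for t in tokens[:count]]
            # Character, logical and Hollerith arrays are skipped in this sketch.
        elif kind == "I":
            entries[name] = int(rest[0])
        elif kind == "R":
            entries[name] = float(rest[0])
    return entries
```

Fed the text of an .fchk file, it returns entries such as `"Number of atoms"` or `"Atomic numbers"` keyed by their names; anything beyond those raw numbers still depends on software that understands Gaussian's conventions.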

So for all the examples I discussed in the RSC article, there IS a choice on offer, and it is that choice that makes the collection FAIR.

I think for each type of data aspiring to be FAIR, it would be good to identify that choice for the user. This is the challenge the community faces.